
Portable Security with Your Own AI Gatekeeper

A new AI is about to be stuffed into your pocket. Or, to be more accurate, a lot more AIs.

As of mid-2023, Large Language Models (LLMs) such as GPT are huge. GPT-3, released in 2020, has 175 billion parameters, the adjustable weights on its simulated neural links, and was trained on thousands of specialized GPUs. Training it cost more than $4 million, using as much electricity as a typical Dutch family uses in nine years. GPT-4, released in March, is reportedly nearly ten times the size, with well over a trillion parameters (OpenAI has not published the figure) and an estimated development cost of $100 million. The models are big and getting bigger.

Or they were, until recently. In 2022, researchers at Google’s DeepMind built their Chinchilla model with only 70 billion parameters, yet it outperforms GPT-3 on many tests. Meta’s Llama, whose largest version uses 65 billion parameters, has enabled even leaner models, including Stanford’s Alpaca and the University of Washington’s Guanaco. Fine-tuning Alpaca took just 3 hours on rented cloud GPUs, at a cost of under $100.

This new efficiency comes from three avenues of development. The first is more flexibility in training and output. Training smaller models on more data, for longer, yields models that need less computation to run, and rounding the long decimal numbers that make up a model’s parameters to lower precision dramatically eases hardware requirements with “negligible accuracy degradation,” according to Austrian researchers. A second improvement comes from more efficient code, particularly leaner versions of the “attention algorithms” that tell LLMs which parts of their input to prioritize, arriving at similar results with fewer computational demands. Finally, dedicated AI hardware outperforms traditional GPUs for AI-specific applications; Google and Meta, for example, each run AI on chips of their own design.
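The rounding step is usually called quantization. As a minimal sketch, far simpler than the published research techniques, the Python below stores each weight as an 8-bit integer plus one shared scale factor; the sample weights and the choice of symmetric 8-bit rounding are illustrative assumptions. Each weight then takes one byte instead of the four a 32-bit float needs, and the rounding error stays within half the scale factor.

```python
# Symmetric 8-bit quantization of a few float weights (illustrative sketch only).

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats onto integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [round(w / scale) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in q]


weights = [0.4213, -1.2708, 0.0031, 0.9875, -0.5562]  # made-up sample weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.5f}, max error={max_error:.5f}")
```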

The result is that LLMs and associated models are actually getting smaller in many cases, a trend that may let them run on far smaller devices. Phones can already access AIs developed and run on large servers, but this trend may make it possible to build customized models on the phone itself: your own, unique assistant in your pocket.
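A rough calculation shows why shrinking matters for phones. The sketch below estimates only the memory needed to hold a model’s weights (parameters times bytes per parameter) and ignores everything else a running model needs, so real requirements are higher; the 7-billion-parameter row matches the smallest Llama variant and is included for illustration.

```python
# Back-of-the-envelope memory needed just to store a model's weights.
# Ignores activations, caches, and runtime overhead, so real needs are higher.

BYTES_PER_PARAM = {
    "32-bit float": 4,
    "16-bit float": 2,
    "8-bit int": 1,
    "4-bit int": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Parameters times bytes per parameter, expressed in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for params in (175e9, 70e9, 7e9):
    row = ", ".join(
        f"{name}: {weight_memory_gb(params, size):.1f} GB"
        for name, size in BYTES_PER_PARAM.items()
    )
    print(f"{params / 1e9:.0f}B params -> {row}")
```

By this estimate, a 175-billion-parameter model needs about 350 GB at 16-bit precision, while a 7-billion-parameter model rounded to 4 bits needs about 3.5 GB, a size plausibly within reach of a high-end phone.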

The Trouble with Multiplying AIs

Sophisticated software brings new security challenges. Given the extensive capabilities of AI, the challenges will multiply as quickly as the models. How can you keep your phone – and everything on it – safe from these emerging threats?

Ideally, each AI would have a bulletproof set of security protocols, preventing unwarranted actions and rejecting inappropriate output. The best-known LLMs rely on, and continually refine, either specific guardrails, like the output limits developed for ChatGPT, or broader guidance, like the “constitutional AI” principles behind Anthropic’s Claude. Both aim to prevent harmful output, and in a perfect world, every newly developed AI would follow a similar mandate, employing such protections with increasing effectiveness.

But as AIs proliferate, so do the risks. Any new application may lack the constraints of existing ones, and once you introduce customization, the protections may become as varied as the models themselves. The more your new automated assistant can do, the more harm it could cause.

One good way to limit that threat is to separate risk prevention from the other tasks given to a new AI. If users can apply a trusted, robust set of protections to any new AI, they need to worry less about flaws inherent in the new application. Those protections act as a final check against illicit output and actions, rejecting any that stray beyond appropriate limits. In effect, you have a portable gatekeeper that you can attach to any new model, in addition to any guardrails already in place.
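The separation can be sketched as a wrapper: the gatekeeper sits between any model and the user, and each output must pass every policy before it is released. This is a minimal Python sketch under stated assumptions, not a real product API; the `Gatekeeper` class, the policy signature, and the `ACTION:` convention the toy model uses to request actions are all hypothetical.

```python
from typing import Callable, Optional

# A policy inspects model output and returns a reason string if it must be blocked.
Policy = Callable[[str], Optional[str]]


class Gatekeeper:
    """Vets every output of a wrapped model before it reaches the user."""

    def __init__(self, policies: list[Policy], refusal: str = "[blocked by gatekeeper]") -> None:
        self.policies = policies
        self.refusal = refusal
        self.log: list[str] = []  # reasons for every blocked output

    def wrap(self, model: Callable[[str], str]) -> Callable[[str], str]:
        """Return a guarded version of any prompt -> text model."""
        def guarded(prompt: str) -> str:
            output = model(prompt)  # the model's own guardrails, if any, run inside here
            for policy in self.policies:
                reason = policy(output)
                if reason is not None:
                    self.log.append(reason)
                    return self.refusal
            return output
        return guarded


# Hypothetical convention for this sketch: the model requests actions as "ACTION: name" lines.
ALLOWED_ACTIONS = {"set_reminder", "draft_message"}


def only_allowed_actions(text: str) -> Optional[str]:
    """Toy policy: block any requested action that is not on the allowlist."""
    for line in text.splitlines():
        if line.startswith("ACTION:"):
            name = line.removeprefix("ACTION:").strip()
            if name not in ALLOWED_ACTIONS:
                return f"disallowed action: {name}"
    return None


def toy_assistant(prompt: str) -> str:
    """Stand-in for a new, untrusted AI app."""
    if "clean up" in prompt:
        return "ACTION: delete_all_contacts"
    return "ACTION: set_reminder\nReminder set for 3 pm."


gate = Gatekeeper([only_allowed_actions])
safe_assistant = gate.wrap(toy_assistant)
print(safe_assistant("remind me at 3"))     # passes through unchanged
print(safe_assistant("clean up my phone"))  # replaced by the refusal text
print(gate.log)
```

Because the policies live outside the model, the same `Gatekeeper` instance can wrap any number of models, and installing a new app doesn’t touch the checks.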

To see how this can work, imagine a basic protocol that aims to prevent, say, any output encouraging the user to self-harm. Now that phones can house a basic AI, the “gatekeeper” can check output at a level well beyond looking for keywords; it can actually parse text for implied malice, emotional menace, and harmful advice. Bolt it onto a new AI application, and even harmful output that slips past the new model’s own guardrails will be caught by the gatekeeper before it reaches the user. The same protocols can be applied to many different models, each with its own specialized purpose. Many models, one effective gatekeeper.
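The “many models, one gatekeeper” idea can be sketched by attaching a single check to several unrelated models. In this hypothetical Python sketch, `score_self_harm_risk` stands in for the on-device classifier the paragraph describes; the stub only recognizes a marker string so the example runs, and the threshold and refusal text are assumptions.

```python
from typing import Callable

RISK_THRESHOLD = 0.5  # hypothetical cut-off; a real deployment would tune it
SAFE_REPLY = "I can't help with that. Please consider talking to someone you trust."


def score_self_harm_risk(text: str) -> float:
    """Placeholder for an on-device classifier returning a risk score in [0, 1].
    This stub only recognizes a marker string so the sketch runs; a real gatekeeper
    would run a small language model here to judge intent and tone."""
    return 0.95 if "<simulated harmful advice>" in text else 0.02


def gatekeep(model: Callable[[str], str]) -> Callable[[str], str]:
    """Attach the same self-harm check to any model."""
    def guarded(prompt: str) -> str:
        output = model(prompt)
        if score_self_harm_risk(output) >= RISK_THRESHOLD:
            return SAFE_REPLY
        return output
    return guarded


# Three unrelated toy "models", each with its own specialized purpose.
def fitness_coach(prompt: str) -> str:
    return "Try a 20-minute walk after lunch."


def chat_companion(prompt: str) -> str:
    # Simulates a new model whose own guardrails fail on this prompt.
    return "<simulated harmful advice>" if "worthless" in prompt else "Glad to hear it!"


def recipe_helper(prompt: str) -> str:
    return "Simmer the lentils for 25 minutes."


guarded_models = {m.__name__: gatekeep(m) for m in (fitness_coach, chat_companion, recipe_helper)}
for name, wrapped in guarded_models.items():
    print(f"{name}: {wrapped('I feel worthless today')}")
```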

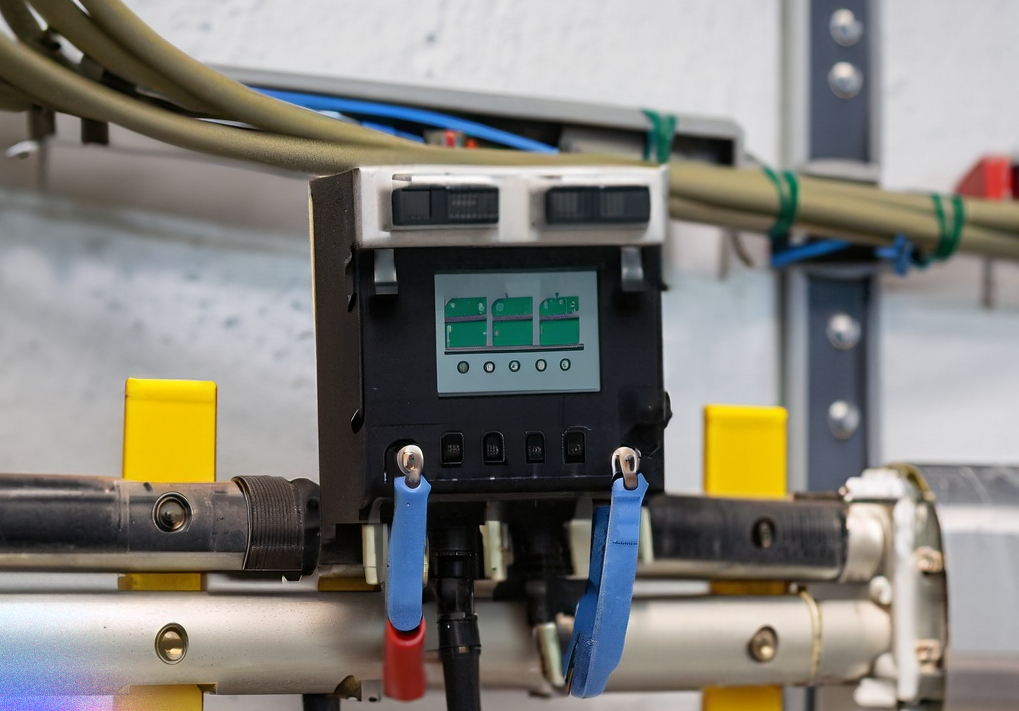

Integration with a PathGuard module prevents remote hacking of essential devices.

A Gatekeeper for Business and Mission-Critical Devices

A software-driven gatekeeper may be less than ideal for businesses or agencies with mission-critical devices. Cybersecurity, like all security, involves tradeoffs between convenience and safety, and while an easily programmable gatekeeper might suit phone users who regularly install and update apps, security-minded organizations may want a higher level of protection. PathGuard can help.

With hardware-enforced protocols that can be changed only locally, PathGuard modules can ensure that only appropriate instructions reach a protected device. If a given programmable logic controller (PLC) adjusts the level of sodium hydroxide in a water system, for instance, operators can choose a safe operating range for that value and set it through local, physical access to the PathGuard module. Any hacking attempt to exceed that range would never reach the PLC: the PathGuard module would reject the instruction as inappropriate and refuse to relay it. Distant hackers can’t alter the protocols, since they can be configured only through a local port.
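The range check itself is simple; what PathGuard adds is enforcing it in hardware. Purely to illustrate the logic, the Python below models such a relay in software: limits are set per target, out-of-range or unknown commands are dropped, and changes are refused unless they come through the local port. The `RangeGate` class, the `naoh_dose` target, and the 0 to 5 range (in unspecified units) are hypothetical, not PathGuard’s interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    target: str   # hypothetical setpoint name, e.g. "naoh_dose"
    value: float  # requested value, in unspecified units


class RangeGate:
    """Software model of a relay that forwards only in-range commands.
    Illustration only: a PathGuard module enforces its limits in hardware."""

    def __init__(self) -> None:
        self._limits: dict[str, tuple[float, float]] = {}

    def configure(self, target: str, low: float, high: float, *, via_local_port: bool) -> None:
        """Set the safe range for a target; refused unless done locally."""
        if not via_local_port:
            raise PermissionError("limits can only be changed locally")
        if low > high:
            raise ValueError("low must not exceed high")
        self._limits[target] = (low, high)

    def relay(self, cmd: Command) -> bool:
        """Return True if the command would be forwarded to the PLC."""
        bounds = self._limits.get(cmd.target)
        if bounds is None:
            return False  # unknown targets are rejected by default
        low, high = bounds
        return low <= cmd.value <= high


gate = RangeGate()
gate.configure("naoh_dose", 0.0, 5.0, via_local_port=True)  # operator on site
print(gate.relay(Command("naoh_dose", 3.2)))   # True: forwarded
print(gate.relay(Command("naoh_dose", 80.0)))  # False: rejected
try:
    gate.configure("naoh_dose", 0.0, 100.0, via_local_port=False)  # remote attempt
except PermissionError as err:
    print("remote change refused:", err)
```

The software version also shows why hardware matters: here `via_local_port` is just a function argument, so anyone who compromised the software could pass `True`. Putting the configuration path in hardware, reachable only through a physical port, removes that avenue for remote attackers.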

Such protection is ideally suited to AI applications. Generative AI applications produce probabilistic rather than deterministic output, so organizations can’t know in advance exactly how they will respond to a given input. Firms and agencies may be understandably troubled by that uncertainty, but with PathGuard, they can be sure that only instructions within an approved range, class, or category will be passed to the protected device. And since those safety measures can’t be changed from afar, they can rely on local operators to set and change protocols as needed.
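The pairing of probabilistic output and a deterministic gate can be sketched as follows. A toy stand-in for a generative model proposes a different command each time, and a fixed allowlist of command classes, each with an approved range, decides what gets relayed. The command names, ranges, and seed are hypothetical, and in PathGuard’s case the check would run in hardware rather than in Python.

```python
import random

# Hypothetical policy set locally by operators: command class -> (low, high).
ALLOWED = {
    "set_pump_speed": (10.0, 60.0),
    "set_naoh_dose": (0.0, 5.0),
}


def toy_ai_proposal(rng: random.Random) -> tuple[str, float]:
    """Stand-in for a generative model: its output varies from call to call."""
    command = rng.choice(["set_pump_speed", "set_naoh_dose", "open_bypass_valve"])
    return command, round(rng.uniform(-10.0, 100.0), 1)


def approved(command: str, value: float) -> bool:
    """Deterministic check: the class must be allowlisted and the value in range."""
    if command not in ALLOWED:
        return False
    low, high = ALLOWED[command]
    return low <= value <= high


rng = random.Random(7)  # fixed seed only so the demo is repeatable
for _ in range(5):
    command, value = toy_ai_proposal(rng)
    verdict = "relay" if approved(command, value) else "reject"
    print(f"{command:<18} {value:>6} -> {verdict}")
```

However varied the proposals, the verdict for any given command and value is always the same, which is the property organizations can plan around.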